Last week I was having all manner of problems setting up a replica of my master authentication server. After a great deal of effort I believe I have solved them, though I won't know for sure without further testing, which I won't be able to do anytime soon because the Fall semester begins on Tuesday.

Getting this working has been a saga of nearly epic proportions. I'd long suspected that my replica problems were due to latent Kerberos problems on the master that arose in the early stages of its installation and setup, and I still believe that's the case. Loath as I was to rebuild a seemingly perfectly working master, I was considering it when circumstances beyond my control (and, I believe, completely unrelated to the problems I was having) forced my hand. Towards the end of the week the master server committed suicide.

I was doing some work on the server when I noticed that the system drive was inexplicably full. Odd, considering that this is a 20GB partition, and the system, apps, and my files should have been using no more than 6GB. Where had all that space gone? Some quick investigating led me to the folder /var/spool/atprintd, which was hogging about 10-15GB of disk space. (I'm recalling all this from memory, so details might not be perfectly accurate.) A surely related symptom was that whenever I went to set print preferences for computers in Workgroup Manager's "Computers" list, I'd get a spinning wheel, but never a graphic or access to the control. I didn't think much of it until I discovered this print queue spooling dangerously out of control. Still, no big deal, I figured. I deleted the spool files, deleted the queue (I wasn't even using it), and rebooted the machine. Problem solved, right? Wrong.
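Tracking down a runaway folder like /var/spool/atprintd mostly comes down to adding up file sizes per directory, which is roughly what `du -sh /var/spool/*` reports from the shell (du counts disk blocks, this counts file sizes, so the numbers can differ a little). For the curious, here is a minimal Python sketch of the same idea, not what I actually ran: it sums the regular files under each immediate child of a starting directory (the current directory unless you pass a path) and prints the largest first. It doesn't follow symlinks and quietly skips anything it can't read, so run it with enough privileges to see the spool folders.

```python
import os
import sys


def dir_size(path):
    """Total size in bytes of the regular files under path (symlinks are not followed)."""
    total = 0
    # onerror swallows permission errors so one unreadable folder doesn't stop the walk.
    for root, _dirs, files in os.walk(path, onerror=lambda err: None):
        for name in files:
            full = os.path.join(root, name)
            try:
                if not os.path.islink(full):
                    total += os.path.getsize(full)
            except OSError:
                pass  # file vanished or is unreadable; skip it
    return total


def biggest_children(parent, limit=10):
    """Return (size, path) for each immediate child of parent, largest first."""
    results = []
    with os.scandir(parent) as entries:
        for entry in entries:
            try:
                if entry.is_dir(follow_symlinks=False):
                    size = dir_size(entry.path)
                elif entry.is_file(follow_symlinks=False):
                    size = entry.stat(follow_symlinks=False).st_size
                else:
                    continue  # skip symlinks, sockets, devices, etc.
            except OSError:
                continue
            results.append((size, entry.path))
    results.sort(reverse=True)
    return results[:limit]


if __name__ == "__main__":
    start = sys.argv[1] if len(sys.argv) > 1 else "."
    for size, path in biggest_children(start):
        print(f"{size / 1e9:8.2f} GB  {path}")
```

Running it as `python3 biggest.py /var/spool` (the script name is just an example) would list the spool subfolders biggest first.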

After the reboot, the server formerly known as "Open Directory Master" listed its role as "Connected to a Directory System" in Server Admin's Open Directory settings pane. Huh? Exactly what directory system was it connected to? Itself, apparently: Directory Access showed it as connected to 127.0.0.1, which is what it should be set to if it's a master. For some reason the server had relinquished its role as master and handed it over to none other than itself. Talk about schizophrenic. Now, I have no idea (nor the time to figure out at this point) how all this role stuff is managed down in the guts of the system. In fact, with the semester looming and all the replica problems I'd been having, I took this as a sign that maybe it was time to rebuild the server. And that's what I did.

Mac OS X Server, for all its relative ease of use, is amazingly finicky about a great many things when it comes to installation and initial setup. And once it's set up wrong, it seems the only way to reset all its various databases and services is to wipe it clean and start from scratch. Some settings, like the IP address, get so ingrained in every database and config file that it's nearly impossible to find every instance and change it. Kerberos, the LDAP database, and UNIX flat files all need to know the server's IP address and Fully Qualified Domain Name (FQDN) accurately before you start any services. And DNS had better be working perfectly somewhere on the network your OS X Server is on, and it had better have a correct record (forward and reverse) for your server, or you're just going to be screwed. Maybe not so screwed that you can't fix the problem, but probably screwed enough that rebuilding your server from scratch is the preferable option. I believe what caused all my problems (and I haven't proven this yet, but I believe it to be so) was a dot. That's right, a dot. One single, stupid little dot I forgot to enter in my reverse DNS table. You know the one. You've surely forgotten it yourself at some point. The entry should look like:

34.1 IN PTR server.systemsboy.domain.com.

But instead you wrote:

34.1 IN PTR server.systemsboy.domain.com

Ooops! See the difference? No dot. No period at the end of the sentence. Kids, punctuation counts in DNS. And DNS counts on Mac OS X Server. Big time.
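Here's why that dot matters so much. In a zone file, a name ending in a dot is absolute; a name without one is relative, and the DNS server quietly appends the zone's origin to it. The minimal Python sketch below mimics just that rule (it ignores escapes and the other subtleties of real zone-file parsing) and assumes a hypothetical reverse zone of 168.192.in-addr.arpa. for a 192.168.x.x network; the post doesn't say which network the server actually lives on.

```python
def qualify(name, origin):
    """Expand a zone-file owner or target name the way a DNS server reads it.

    A name ending in '.' is absolute and used as-is; '@' means the origin
    itself; anything else is relative and gets the zone's origin appended.
    """
    if not origin.endswith("."):
        raise ValueError("origin must be fully qualified (end with '.')")
    if name == "@":
        return origin
    if name.endswith("."):
        return name
    return f"{name}.{origin}"


# Hypothetical reverse zone for a 192.168.x.x network.
ORIGIN = "168.192.in-addr.arpa."

owner = qualify("34.1", ORIGIN)
print(owner, "->", qualify("server.systemsboy.domain.com.", ORIGIN))
print(owner, "->", qualify("server.systemsboy.domain.com", ORIGIN))
# 34.1.168.192.in-addr.arpa. -> server.systemsboy.domain.com.
# 34.1.168.192.in-addr.arpa. -> server.systemsboy.domain.com.168.192.in-addr.arpa.
```

With the dot, the PTR record for 192.168.1.34 points at the server. Without it, the record points at server.systemsboy.domain.com.168.192.in-addr.arpa., a name that doesn't exist, and nothing warns you.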

So now you're fucked and you don't even realize it. You build your server, things seem fine, and then suddenly the simplest of things, like building your replica, just don't work, and you've no idea why. There are no error messages, no warning dialogs. Things just don't work.

I learned a few other things over the course of this saga about the finickiness of Mac OS X Server. In Tiger Server (10.4 and later), if you want to host a replica, Kerberos has to be running properly. The Apple folks will tell you that once you set your server to "Open Directory Master," Kerberos will just set itself up and start automagically, which it will, as long as your DNS is set up right. Otherwise it won't start at all, and, per the manual, you can start it up later, by hand, in Server Admin. But if you've already done a bunch of other Kerberos-dependent stuff on your server (adding users, say, or creating a replica) and you then try to Kerberize your server, you're most likely quite fucked. And there's no easy way out. Your best bet is to rebuild the server from scratch. The alternative is to scour the numerous databases and config files in an attempt to correct the problem. But you'll never be sure it's right. So you rebuild the server.

I rebuilt the server.

It wasn't so bad. I'm getting really good at batch importing users into Open Directory (which will be the subject of a later post). The worst part was that the Windows machines all had to be rejoined to the new server by hand. Otherwise, with a proper DNS configuration — and, just to be on the safe side, with the master and the replica added to the /etc/hosts table — rebuilding the server was as easy as it's supposed to be. And now I have a server in which I have a great deal more confidence. Kerberos, in particular, seems to be working properly now. The weird error messages and inability to authenticate via Kerberos using the OD admin user are finally gone.
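Adding the master and replica to /etc/hosts is easy to get subtly wrong too, say with a typo in a name or a stale address. Here is a minimal Python sketch for checking it: it parses hosts-file text (an address followed by one or more names, with # comments) and reports any expected host that is missing or points at a different address. The hostnames and addresses in the usage block are hypothetical placeholders, not the ones from my setup.

```python
def parse_hosts(text):
    """Map each hostname or alias in /etc/hosts-style text to its address."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank space
        if not line:
            continue
        fields = line.split()
        if len(fields) < 2:
            continue  # an address with no names is useless
        address, names = fields[0], fields[1:]
        for name in names:
            # The first matching line is the one normally used, so keep it.
            table.setdefault(name.lower(), address)
    return table


def missing_entries(text, expected):
    """Return {host: address found or None} for hosts that don't match expected."""
    table = parse_hosts(text)
    return {
        host: table.get(host.lower())
        for host, addr in expected.items()
        if table.get(host.lower()) != addr
    }


if __name__ == "__main__":
    # Hypothetical names and addresses; substitute your own master and replica.
    wanted = {
        "od-master.systemsboy.domain.com": "192.168.1.34",
        "od-replica.systemsboy.domain.com": "192.168.1.35",
    }
    with open("/etc/hosts") as f:
        problems = missing_entries(f.read(), wanted)
    for host, found in problems.items():
        print(f"{host}: expected {wanted[host]}, found {found}")
    if not problems:
        print("master and replica entries look right")
```

An empty result means every expected name is present and points where you think it does.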

If I may make an aside to wax bitchy for one moment: it seems to me that Apple needs to make a much bigger deal of all these dependencies. Proper server operation, especially Kerberos configuration, is heavily reliant on proper DNS configuration. I've known that for a while, mostly from forums and admin sites rather than from Apple's server documentation. But replication also depends on proper Kerberos configuration, which I never knew until I scoured the forums. I don't even use Kerberos, so I never worried about it being configured properly. And back in Panther you could easily replicate without it. That's changed, and it would be nice if someone hollered about it quite loudly in the manuals. It would also be really great to have a way to put everything back to spec. FQDN assignment is done automatically by the server, and you no longer have the option to do it by hand. This happens quite early in the boot process and is completely dependent upon proper DNS. But if you screw up your DNS, there appears to be no safe way to go back and redo the FQDN assignment. Everything gets hosed by one little mistake. I'd like a button that resets everything on the server (Kerberos, FQDN, IP address and OD database) back to the settings just after a clean install, and then brings you to the Setup Assistant to redo all the settings. At least then you wouldn't have to reinstall everything. (You do realize, of course, that there is no "Archive and Install" feature for Mac OS X Server. So any install means applying all the multi-megabyte software updates again and re-adding any users, shares, computers and the like. Which is a big, fat, nasty pain.)
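Since so much hinges on DNS being right before the server is promoted to Open Directory master, it's worth confirming that the server's name resolves to an address and that a reverse (PTR) lookup of that address comes back to the same name. Here's a minimal Python sketch using the standard socket module. Two caveats: it asks the machine's own resolver, so an /etc/hosts entry can answer in place of your DNS server (to test the DNS server itself, use a tool like `dig` or `host`), and the resolver functions are parameters only so the check can be exercised without a network. Pass the server's FQDN as the first argument.

```python
import socket
import sys


def check_dns(fqdn, forward=socket.gethostbyname, reverse=socket.gethostbyaddr):
    """Check that fqdn resolves to an address whose reverse lookup returns fqdn.

    Returns a list of problem descriptions; an empty list means forward and
    reverse lookups agree.
    """
    try:
        address = forward(fqdn)
    except OSError as err:  # socket.gaierror is a subclass of OSError
        return [f"forward lookup of {fqdn} failed: {err}"]
    try:
        name, aliases, _addrs = reverse(address)
    except OSError as err:  # socket.herror is a subclass of OSError
        return [f"reverse lookup of {address} failed: {err}"]
    # Compare case-insensitively and ignore any trailing dot.
    names = {n.rstrip(".").lower() for n in [name, *aliases]}
    if fqdn.rstrip(".").lower() not in names:
        return [f"{address} reverses to {name}, not {fqdn}"]
    return []


if __name__ == "__main__":
    if len(sys.argv) < 2:
        print("usage: check_dns.py server.example.com")
    else:
        host = sys.argv[1]
        issues = check_dns(host)
        print("\n".join(issues) if issues else f"{host}: forward and reverse DNS agree")
```

A PTR record missing its trailing dot shows up here as a reverse lookup that returns the long, origin-suffixed name instead of the server's FQDN.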

Okay, so the burning question: What about the replica? Did the rebuild yield a usable replica? The answer is not pretty: I think so.

Once I had my master server rebuilt (and cloned for easy restore!) I tried the replica. Replication seemed to go fine, but then, it did before too, so that wasn't necessarily reassuring. Once the replica was built I pulled the plug on the master. This time, things were even worse than before. No authentication was able to take place on the clients. They just didn't see the replica. But why?

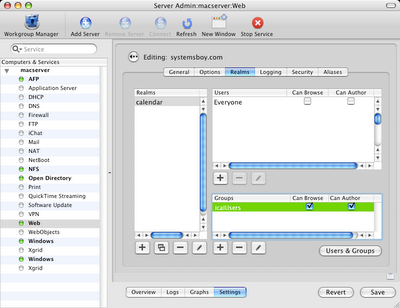

One of the things I learned through all of this is that it's the client that maintains the list of replicas. It gets this list from the master OD server when it binds to that server (i.e. when you open Directory Access and set it up), and it probably also refreshes the list periodically, perhaps when the MCX cache is refreshed, but I'm not really sure. You can see all these settings in one particular preference file, called DSLDAPv3PlugInConfig.plist, located in /Library/Preferences/DirectoryService. One of the things you'll see in this file is the IP address of your master server, and if there are any replicas, they will be listed as well. Checking this file on my clients showed that they had not gotten the replica info from the new server. They had no idea the replica existed. So I plugged the master back in and re-bound a client machine. It got the replica info. I unplugged the master again, and after a few minutes my client could again authenticate to the replica. It worked! Finally!
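Because the client's copy of the master and replica list lives in that plist, it's handy to be able to dump it quickly on a few clients. Here is a minimal Python sketch: it loads the file with the standard plistlib module (which reads both XML and binary plists) and prints every string value that parses as an IP address, along with where in the file it sits. It deliberately assumes no particular key names, and it will miss any server or replica recorded by hostname rather than by address. You may need sudo to read the file.

```python
import ipaddress
import os
import plistlib
import sys

PLIST = "/Library/Preferences/DirectoryService/DSLDAPv3PlugInConfig.plist"


def find_addresses(node, path="root"):
    """Recursively yield (key path, value) for every string that is an IP address."""
    if isinstance(node, dict):
        for key, value in node.items():
            yield from find_addresses(value, f"{path}/{key}")
    elif isinstance(node, list):
        for i, value in enumerate(node):
            yield from find_addresses(value, f"{path}[{i}]")
    elif isinstance(node, str):
        try:
            ipaddress.ip_address(node.strip())
        except ValueError:
            return  # not an address; ignore it
        yield path, node.strip()


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else PLIST
    if not os.path.exists(target):
        print(f"{target} not found")
    else:
        with open(target, "rb") as f:
            config = plistlib.load(f)
        for key_path, address in find_addresses(config):
            print(f"{address:<15}  {key_path}")
```

If a bound client lists only the master's address, it hasn't picked up the replica yet, which is exactly what I found before re-binding.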

Now, all of this happened on Friday at around 9PM. I'd worked about 52 hours by that point. Testing the replica on a larger scale, and on Windows in particular, would have taken effort I was no longer able or willing to exert: un-joining Windows systems from the master, removing them from the computer list, rejoining them, pulling the plug, testing the behavior, and so on, all of which would have taken at least another hour or so to do properly. And whether it worked or not, I'd still have to rebind every machine one by one the following week, just to be safe. So I quit. And that's why, when you ask me, "Did the replica work?" I can only answer, "I think so."

To qualify: I think we have a winner. It seems to work on the Macs, and I think it will work on Windows (though how well it works there, and whether it works the way we hope, is anyone's guess). The previous difficulties seem to have been caused by Kerberos-related problems on the master OD server, but I haven't thoroughly tested the fix yet. And if it doesn't work, then that's that. We'll probably give up on the whole replica idea for the semester, since the only way to test it is to effectively bring down the network, and I'm not so willing to pull plugs when school is in session. So next week I will test a Windows machine if I can find a good time to do it. Either way, I will be rebinding all Macs and Windows machines to the new server in the hope that replication is indeed working. But if there's no time to test it, we may not know for sure until the master server dies. Sure, it's a little scary. But I've seen worse.

One last thing: I did have the strangest problem with my latest master/replica setup during testing. After pulling the plug on the master and then plugging it back in, I was unable to authenticate to the master as the directory admin user from WGM, Server Admin or ARD. I was able to ssh in, but I could not reboot the machine from the shell because sudo would not accept the password from either the directory admin account or the local admin account. I was totally locked out. The only thing I could do was hard reboot the server by holding down its power button. Fortunately, after that, the server began behaving normally again, and root access was once again right with the universe. Sure did make me nervous, though.

Anyway, thus ends, essentially, the saga of our replica (at least for now) and, for the most part, the saga of Three Platforms, One Server. We're now in the final stage of the project: going live. Classes begin on Tuesday, and we'll get to see how our master authentication server holds up under load. If disaster ensues, expect more posts on the subject. If all goes well (knock wood with me, people), I will post a final round-up article and this series will be concluded. Also, if I get a chance to test my new replica more thoroughly, I will post the results either here or in another article.

Okay, everyone. Have a great school year!