Recently, one of our producers needed to cut a 20+ minute planetarium show down to a 3-minute trailer, so he needed a way to edit an image sequence composed of extremely large, non-standard frames. We decided to use Final Cut Pro as the editing software, but Final Cut is decidedly not built for working far beyond the confines of its presets. Nevertheless, it is a flexible enough tool to get the job done, with a little effort and a fast computer. Here's a blow-by-blow of what we did.

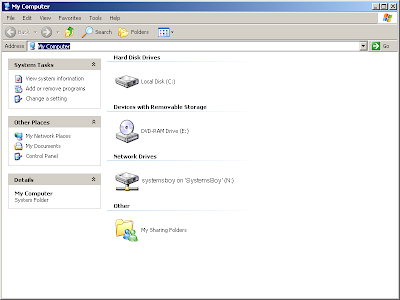

- Make Reference Movies of Frames

The first step in the process was to make reference movies of the nearly 30,000 frames. Nothing about this entire process was straightforward, and this first step was no exception. Attempting to import the entire range of frames invariably crashed QuickTime, but we were able to work with a subset of frames, so we ended up breaking the frames into folders of 5000. Trying to move that many large files in the Finder would overwhelm the GUI, causing it to hang for long stretches, so we moved the files via the command line, which went exceedingly quickly. The Finder apparently still needs some help handling large groups of files. But in the end, thanks to Mac OS X's UNIX core, we had six folders full of images. We opened each folder as an image sequence in QuickTime, then saved it as a reference movie.
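For the curious, the folder-splitting step can be sketched in a few lines. This is a minimal Python illustration, not the commands actually used here. The function name `split_into_folders`, the `*.tif` pattern, and the `frames_NN` folder names are assumptions made for the example, and it relies on zero-padded frame numbers so that a plain sort keeps the frames in order.

```python
import shutil
from pathlib import Path


def split_into_folders(src_dir, dest_root, chunk_size=5000, pattern="*.tif"):
    """Move frames from src_dir into numbered subfolders of at most chunk_size files.

    Returns the list of folders that received frames, in order.
    """
    src = Path(src_dir)
    dest = Path(dest_root)
    # Lexicographic sort; assumes frame numbers are zero-padded to equal width.
    frames = sorted(src.glob(pattern))
    created = []
    for start in range(0, len(frames), chunk_size):
        # Hypothetical naming scheme: frames_01, frames_02, ...
        folder = dest / f"frames_{start // chunk_size + 1:02d}"
        folder.mkdir(parents=True, exist_ok=True)
        for frame in frames[start:start + chunk_size]:
            shutil.move(str(frame), str(folder / frame.name))
        created.append(folder)
    return created
```

Called as `split_into_folders("frames", "chunks")` on just under 30,000 frames, this would yield six folders of up to 5000 frames each, which matches what we ended up with.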

- Make DV-NTSC Movies from Reference Movies

Since we were going to be editing this material, we wanted to get it into a good, edit-friendly CODEC. We chose DV-NTSC since it looks pretty good and is really easy to work with in Final Cut. Making our DV-NTSC movies actually went pretty well. QuickTime has done better than the Finder at keeping current with the latest hardware capabilities, and once we'd broken our image sequence down a bit, it made relatively short work of exporting the pieces to DV-NTSC. Blessedly, QuickTime was also able to load and export multiple movies at once and take full advantage of our eight cores to process them.

- Import and Edit at DV-NTSC Quality

Well, okay... This was the easy part. In fact, making this easy was the whole point of all those other steps.

- Create Custom Sequence Settings

DV-NTSC was nowhere near the resolution we needed for our final product. We only bumped down to it to make editing more feasible. Once the editing was done, we wanted to output this puppy back out to gigantic frames, so we needed a custom sequence preset that matched the properties of those frames. Later we'd use this preset to create our final, high-res output.

- Create Offline Project with Custom Settings

Final Cut has a nifty feature called Media Manager, which allows you to consolidate your media and get rid of unused clips in order to reclaim disk space. It can also be used to conform an offline (low-quality) version of your project to an online (high-quality) version, which is what we did. In Final Cut, we went to the Media Manager (under the "File" menu) and chose "Create offline" in the pulldown in the Media section where it says "media referenced by duplicated items."

- Reconnect Media to High-Res Source

Once we had our offline project, we needed to re-associate our DV-NTSC clips with the original, full-quality media, i.e. our reference movies. This was fairly easy to do: simply right-click the media and choose "Make Offline..." from the menu. Then right-click the movies again and choose "Reconnect Media..."

When prompted for the clips, we chose "Locate," and then selected our high-quality material. Be sure to uncheck "Matched Name and Reel Only" when selecting the high-res clip.

- Export Back Out to Frames with Compressor

Once the movies were reconnected to the original source material, we needed to output back to the original source type, which in our case was a TIFF sequence. There are a few ways to do this, but we had the best luck (i.e., the fewest crashes and slowdowns) sending the job right from Final Cut to, believe it or not, Compressor. This is certainly the first time in my storied history that I can say Compressor was the right tool for the job, but indeed it was. It knocked it out of the park. We did, of course, have to set up a preset in Compressor that exported TIFF frames and apply it to our job. But once that was done, Compressor did its thing and did it well.
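Since an export of this size can hiccup, a quick check that the numbered output sequence has no holes may be worthwhile. The following is a minimal Python sketch and not part of the workflow described here. The function name `find_missing_frames`, the `*.tif` pattern, and the assumption that each file name ends in its frame number are all hypothetical.

```python
import re
from pathlib import Path


def find_missing_frames(frame_dir, pattern="*.tif"):
    """Return the sorted frame numbers missing from a numbered image sequence."""
    numbers = set()
    for path in Path(frame_dir).glob(pattern):
        # Assumes the file name (minus extension) ends in the frame number,
        # e.g. trailer_00042.tif -> 42.
        match = re.search(r"(\d+)$", path.stem)
        if match:
            numbers.add(int(match.group(1)))
    if not numbers:
        return []
    # Any number between the lowest and highest frame that has no file is a gap.
    return [n for n in range(min(numbers), max(numbers) + 1) if n not in numbers]
```

An empty list means every frame between the first and last number is present. It says nothing about whether the individual files are intact.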

In the end, what we got was a virtually lossless edit of our show. Because we used the original TIFF frames to render out our final trailer cut, and because Final Cut and Compressor shouldn't need to recompress those frames unless they've been altered somehow (which they only were in spots where effects or transitions occurred), our final output looks just as good as the original, even though we edited in the edit-friendly DV-NTSC CODEC. Which is exactly what we were going for.

There were a lot of hiccups along the way. One thing we noticed is that Final Cut does not take advantage of multiple processors, though, blessedly, QuickTime and Compressor now both do. Also, the Finder is still pretty damned abominable when it comes to dealing with a very large number of large files, which is a real shame since its main job is file management. QuickTime, too, had some performance issues with the large number of files. These things certainly slowed us down a bit. But with some ingenuity and tenacity, we were able to accomplish a pretty difficult task in a relatively short time. It was pretty cool, and now, if something like this ever comes up again, I'll have a process for it.

I want to say, too, that this is just the sort of challenge SysAdmins look for. Or at least SysAdmins like me. It's projects like this that make me happy I am where I am in my job and my career.